Introduction

The following article presents a map between modeling theory (model-driven development and model-driven SOA) and possible implementations with the next wave of Microsoft modeling technology, codename “Oslo.”

Microsoft has been disclosing much information about “Oslo” while delivering several Community Technology Previews (CTPs), or early betas. However, most of the information that is available on “Oslo” is very technology-focused (internal “M”-language implementation, SDK, and so on). This is why I want to present a higher-level approach. Basically, I want to discuss Why modeling, instead of only How.

Problem: Increase in SOA Complexity

What is the most important problem in IT? Is it languages, tools, programmers? Well, according to researchers and business users, it is software complexity. And this has been the main problem since computers were born. Application development is a costly process, and software requirements are only increasing. Integration, availability, reliability, scalability, security, and integrity/compliance are becoming more complicated issues, even as they become more critical. Most solutions today require the use of a collection of technologies, not only one. At the same time, the cost to maintain existing software is rising.

In many aspects, enterprise applications have evolved into something that is too complex to be really effective and agile.

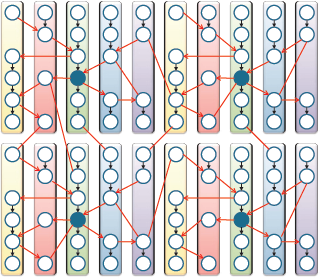

With regard to service-oriented architecture (SOA), when an organization has many connected services, the logical network can become extremely difficult to manage—something that is similar to what is shown in Figure 1, in which each circle would be a service and each box a whole application.

Figure 1. Point-to-point services network

The problem with this services network is that all of these services are directly connected; we have too many point-to-point connections between services. Using point-to-point connections is fine when we have only a few services; however, as the number of services in our organization grows, the number of possible connections grows much faster, and this approach becomes unmanageable.
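To give a rough sense of how quickly a point-to-point network grows, the following minimal sketch compares the worst-case number of links in a fully connected network with the number of links needed when every service talks only to a central hub. The function names are hypothetical, and the worst case (every service linked to every other) is an assumption for illustration; real networks are usually sparser.

```python
def point_to_point_links(n: int) -> int:
    """Worst-case number of direct links when every service may be
    connected to every other service (n choose 2)."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Number of links when each service connects only to a central hub."""
    return n


# Compare both topologies for a few (illustrative) network sizes.
for n in (5, 20, 100):
    print(f"{n} services: {point_to_point_links(n)} point-to-point links, "
          f"{hub_links(n)} hub links")
```

Under these assumptions, 100 services can require up to 4,950 direct links, compared with 100 links to a hub.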

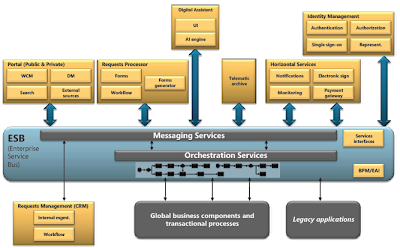

Sure, you will say that we can improve the previous model by using an enterprise-service-bus (ESB) approach and service-orchestration platforms, as I show in Figure 2. Even then, however, the complexity is very high: The implementation is still done at a low level (programming in languages such as the Microsoft .NET languages and Java); therefore, its maintenance and evolution costs are quite high, although not as high as with non-SOA applications.

Figure 2. SOA architecture, based on ESB as central point

SOA foundations are right; however, we must improve the work when we design, build, and maintain.

Does SOA Really Solve Complexity, and Is It Agile?

On the other hand, SOA has been the “promised land” and a main IT objective for a long time. There are many examples in SOA in which the theory is perfect, but its implementation is not. The reality is that there are too many obstacles and problems in the implementation of SOA. Interoperability among different platforms, as well as real autonomous services being consumed from unknown applications, really are headaches and slow processes.

SOA promised a functionality separation of a higher level than object-oriented programming and, especially, a much better decoupled components/services architecture that would decrease external complexity. Additionally, separation of services implementation from services orchestration results in subsystems being much more interchangeable during orchestration. The SOA theory seems correct.

But, what is the reality? In the experiences of most organizations, SOA in its pure essence has not properly worked out. Don’t get me wrong; services orientation has been very beneficial, in most situations—for instance, when using services to connect presentation layers to business layers, or even when connecting different services and applications.

However, when we talk about the spirit of SOA (such as the four tenets), the ultimate goals are the following:

In SOA, we can have many autonomous services independently evolving—without knowing who is going to use my services or how my services are going to be consumed—and those services should even be naturally connected.

I think that this is a different story. This theory has proven to be very difficult; SOA is not as agile or oriented toward business experts as organizations would like.

Is SOA Dead?

A few months ago, a question arose in many architectural forums. It probably was started by Anne Thomas Manes (Burton Group) in a blog post called “SOA Is Dead; Long Live Services.”

So, is SOA dead? I truly don’t think so. SOA foundations are completely necessary, and we have moved forward in aspects such as decoupling and interoperability (when compared with “separate worlds” such as CORBA and COM). So, don’t step back; I am convinced that service orientation is very beneficial to the industry.

The question and thoughts from Anne were very interesting, and she certainly shook up the architecture and IT communities. She did not mean that service orientation is out of order; basically, what she said is the following:

“SOA was supposed to reduce costs and increase agility on a massive scale. Except in rare situations, SOA has failed to deliver its promised benefits... .

“Although the word ‘SOA’ is dead, the requirement for service-oriented architecture is stronger than ever.

“But perhaps that’s the challenge: The acronym got in the way. People forgot what SOA stands for. They were too wrapped up in silly technology debates (e.g., ‘What’s the best ESB?’ or ‘WS-* vs. REST’), and they missed the important stuff: architecture and services.”

So, I don’t believe that SOA is dead at all, nor do I think that Anne meant that. She was speaking out against the misused SOA word. Note that she even said that “the requirement for service-oriented architecture is stronger than ever.” The important points are the architecture and services. Overall, however, I think that we need something more. SOA must become much more agile.

Furthermore, at a business level, companies every day are requiring a shorter time to market (TTM). In theory, SOA was going to be the solution to that problem, as it promised flexibility and quick changes. But the reality is a bit sad—probably not because the SOA theory is wrong, but because the SOA implementation is far from being very agile. We still have a long way to go.

As a result of these SOA pain points, business people can often feel very confused.

As Gartner says, SOA pillars are right, but organizations are not measuring the time to achieve a return on investment (ROI). One of the reasons is that the business side really does not understand SOA.

To sum up, we need much more agility and an easier way to model our services and consumer applications. However, we will not achieve this until business experts have the capacity to verify directly and even model their business processes, services orchestration, or even each service. Nowadays, this is completely utopian. But who knows what will start happening in the near future?

Model-Driven SOA: Is That the Solution?

Ultimately, organizations must close the gap between IT and business by improving the communication and collaboration between them. The question is, “Who has to come up?” Probably, both. IT has to come closer to business and be much more accessible and friendly. At the same time, business experts have to reach a higher level to be able to leverage their knowledge more directly. They have to manipulate technology, but in a new way, because they still have to focus on the business; they do not have to learn how to program a service or application by using a low-level language such as C#, VB, or Java.

Someday, model-driven SOA might solve this problem. I am not talking only about services orchestration (by using platforms such as Microsoft BizTalk Server); I mean something else—a one-step-forward level—in which orchestration has to be managed not by technical people, but by business experts who have only a pinch of technical talent. This is something far from the context of today, in which we need technical people for most application changes. I mean a new point of view—one that is closer to the business side.

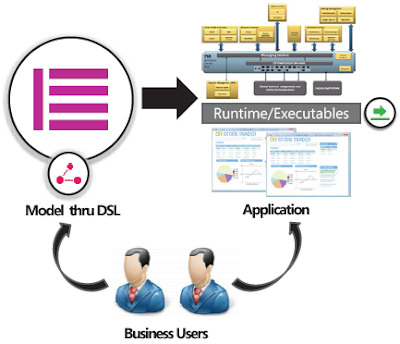

Model-driven SOA will have many advantages, although we will have to face many challenges. But the goal is to solve most typical SOA problems, because model-driven development (MDD) makes the following claim: “The model is the code.” (See Figure 3.)

Figure 3. MDD: “The model is the code.”

The advantages and claims of model-driven SOA are the following:

1. The model is the code. Neither compilation nor translation should be necessary. This is a great advantage over code generators, which ultimately force us to maintain/update the generated code directly.

2. Solutions that model-driven SOA creates will be compliant with SOA principles, because we are still relying on SOA. What we are changing is only the way in which we will create and orchestrate services.

3. Models have to be defined by business languages. At that very moment, we will have achieved our goal: to close the gap between IT and business, between CIO and CEO.

4. We will consequently get our desired flexibility and agility (promised by SOA, but rarely achieved nowadays) when most core business processes are transformed to the new model. When we use model-driven SOA, changes in business processes have to be made in the model; then, the software automatically changes its behavior. This is the required alignment between IT and business. This is the new promise, the new challenge.
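The idea that a change in the model changes the behavior of the software can be illustrated with a minimal sketch. This is not how “Oslo” works internally; it only assumes that a business process is held as data (here, an ordered list of step names) and interpreted at run time. The service functions, the step names, and the `run_process` helper are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical services; in a real SOA these would be calls to deployed services.
def check_credit(order: dict) -> dict:
    order["credit_ok"] = order["amount"] <= 10_000
    return order

def apply_promotion(order: dict) -> dict:
    order["amount"] = round(order["amount"] * 0.9, 2)  # illustrative 10% discount
    return order

def price_order(order: dict) -> dict:
    order["price"] = order["amount"]
    return order

# Registry that maps the names used in a model to the services that implement them.
SERVICES: Dict[str, Callable[[dict], dict]] = {
    "check_credit": check_credit,
    "apply_promotion": apply_promotion,
    "price_order": price_order,
}

def run_process(model: List[str], order: dict) -> dict:
    """Interpret the model: call each named service in the order given."""
    result = dict(order)  # work on a copy so that the input is not modified
    for step in model:
        result = SERVICES[step](result)
    return result

# Two versions of the same business process, expressed purely as data.
model_v1 = ["check_credit", "price_order"]
model_v2 = ["check_credit", "apply_promotion", "price_order"]

print(run_process(model_v1, {"amount": 200.0}))
print(run_process(model_v2, {"amount": 200.0}))
```

Moving from `model_v1` to `model_v2` adds a promotion step without touching `run_process` or any of the services; only the model changes.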

One Objective with “Oslo”: Is “Model-Driven SOA” Possible?

Microsoft is actually building the foundations to achieve MDD through a base technology, codename “Oslo.” Microsoft has been disclosing what “Oslo” is since its very early stages, so as to get customer and partner feedback and create what organizations need.

Of course, there are many problems to solve. The most difficult is to make domain-specific modeling in “Oslo” accessible enough that business experts can review and even execute the models, while at the same time producing interconnected executables across different models.

Basic Concepts of “Oslo”

“Oslo” is the code name for the forthcoming Microsoft modeling platform. Modeling is used across a wide range of domains; it allows more people to participate in application design and allows developers to write applications at a much higher level of abstraction.

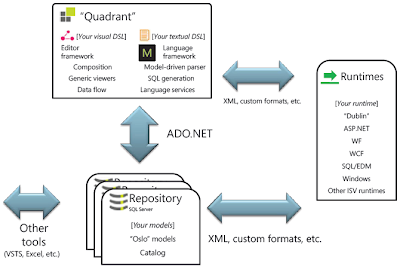

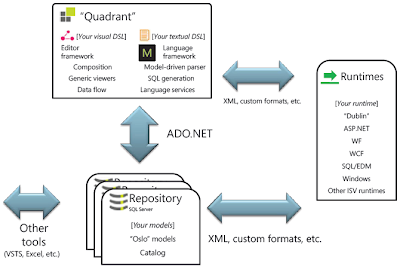

Figure 4. Architecture of “Oslo” and related runtimes

So, what is “Oslo”? It consists of three main pillars:

* A language (or set of languages) that helps people create and use textual, domain-specific languages (DSLs) and data models. I am talking about the “M” language and its sublanguages (“M,” MGraph, MGrammar, and so on). By using these high-level languages, we can create our own textual DSLs.

* A tool that helps people define and interact with models in a rich and visual manner. The tool is called “Quadrant.” By using “Quadrant,” we will be able to work with any kind of visual DSL. This visual tool, by the way, is based on the “M” language as its foundation technology.

* A relational repository that makes models available to both tools and platform components. This is called simply the “Oslo” repository.

I am sure that Microsoft will be able to close the loop in anything that is related to those key parts (“M,” “Quadrant,” and the repository) for modeling metadata. In my opinion, however, the key part in this architecture is the integration of “Oslo” with the runtimes, such as the next Microsoft application-server capabilities (codename “Dublin”), ASP.NET (Web development), Windows Communication Foundation (WCF), and Windows Workflow Foundation (WF). This is the key for success in model-driven SOA (and MDD in general).

Will we get generated source code (C#/VB.NET) or generated .NET MSIL assemblies? Will it be deployed through “Dublin”? Will it be so easy that business experts will be able to implement/change services? Those are the key points. And this is really a must, if “Oslo” wants to be successful in model-driven engineering (MDE) and MDD.

This is also the vision of Bob Muglia (Senior Vice President, Microsoft Server & Tools Business), who promises that “Oslo” will be deeply integrated in the .NET platform (.NET runtimes):

“The benefits of modeling have always been clear; but, traditionally, only large enterprises have been able to take advantage of it, and [only] on a limited scale. We are making great strides in extending these benefits to a broader audience by focusing on [certain] areas. First, we are deeply integrating modeling into our core .NET platform. Second, on top of the platform, we build a very rich set of perspectives that help specific persons in the life cycle get involved.”

“Oslo” to Bring Great Benefits to the Arena of Development

In my opinion, it is good to target high goals. That is why we are trying to use MDD and MD-SOA to close the gap with business. However, even if we cannot reach our business-related goals, “Oslo” will bring many benefits to the development arena. In any case, we architects and developers will get a metadata-centric applications factory. To quote Don Box (Principal Architect in the “Oslo” product group):

“We’re building ‘Oslo’ to simplify the process of developing, deploying, and managing software. Our goal is to reduce the gap between the intention of the developer and the actual artifacts that get deployed and executed.

“Our goal is to make it possible to build real apps purely out of data. For some apps, we’ll succeed. For others, the goal is to make the transition to traditional code as natural as possible.”

MDD and “Oslo”

In the context of “Oslo,” MDD indicates a development process that revolves around the building of applications primarily through metadata. In fact, this is the evolution that all development languages and platforms have followed. With every new version of a development platform, we have had more and more metadata and less hardcoded/compiled code (consider WF, WPF, XAML, and even HTML). However, with MDD and “Oslo,” we are going several steps further: We are moving more of the definition of an application out of the world of code and into the world of data. As data, the application definition can be easily viewed and quickly edited in a variety of forms (even queried), which makes all of the design and implementation details much more accessible. This is what the “Oslo” modeling technology is all about.

Figure 5. MDD with “Oslo”
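As a small illustration of what it means for an application definition to be viewable, editable, and queryable as data, consider the following sketch. It does not use “M” or the “Oslo” repository; the dictionary layout, the service names, and the two query helpers are assumptions made only for this example.

```python
from typing import Dict, List

# A hypothetical application definition held as plain data rather than as code.
application_model: Dict[str, List[dict]] = {
    "services": [
        {"name": "Pricing", "consumers": ["Quotes", "Billing"]},
        {"name": "Promotions", "consumers": ["Quotes"]},
        {"name": "Ledger", "consumers": []},
    ]
}

def services_without_consumers(model: Dict[str, List[dict]]) -> List[str]:
    """Query the model for services that nothing consumes yet."""
    return [s["name"] for s in model["services"] if not s["consumers"]]

def services_used_by(model: Dict[str, List[dict]], consumer: str) -> List[str]:
    """Query the model for every service that a given application consumes."""
    return [s["name"] for s in model["services"] if consumer in s["consumers"]]

print(services_without_consumers(application_model))
print(services_used_by(application_model, "Quotes"))

# Editing the definition is a data change, not a code change.
application_model["services"][2]["consumers"].append("Billing")
print(services_without_consumers(application_model))
```

Questions such as "which services are unused?" become simple queries over the model, which is much harder when the same information is buried in compiled code.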

On the other hand, models (especially in “Oslo”) are relevant to the entire application life cycle. The term model-driven implies a level of intent and longevity for the data in question—a level of conscious design that bridges the gaps between design, development, deployment, and versioning.

For instance, Douglas Purdy (Product Unit Manager for “Oslo”) says the following:

“For me, personally, ‘Oslo’ is the first step in my vision to make everyone a programmer (even if they don’t know it).” (See Doug’s blog.)

This is really a key point, if we are talking about MDD; and, from Doug’s statement, I can see that the “Oslo” team supports it. Almost everyone should be able to model applications or SOA—especially business experts, who have knowledge about their business processes. This will provide real agility to application development.

Model-Driven SOA with “Oslo”

Now, we get to the point: model-driven SOA with “Oslo.”

Model-driven SOA is simply a special case within MDD/MDE. Therefore, a main goal within “Oslo” must be the ability to model SOA at a very high level—even for business experts (see Figure 5).

The key point in Figure 5 is the implementation and deployment of the model (the highlighted square that is on the right). “Oslo” must achieve this transparently. With regard to model-driven SOA (and MDD in general), the success of “Oslo” depends on having good support from base-technology runtimes (such as “Dublin,” ASP.NET, WCF, and WF) and, therefore, support and commitment from every base-technology product group.

Possible Scenarios in MD-SOA with “Oslo”

Keep in mind that the following scenarios are only my thoughts with regard to how I think we could use “Oslo” in the future to model SOA applications. These are neither official Microsoft statements nor promises; it is just how I think it could be—even how I wish it should be.

Modeling an Online Finance Solution

In the future, one of the key functions of “Oslo” will be to automate and articulate the agility that SOA promised. So, imagine that you are the chief executive of a large financial company. Your major competitor has started offering a new set of solutions to customers. You want to compete against that; but, instead of taking several months to study, analyze, and develop from scratch a new application by using new business logic, you can manage it in a few weeks, or even in only a few days. By using a hypothetical SOA flavor of “Oslo,” your product team collects the needed services (which are currently available in your company), such as pricing and promotions, to create a new finance solution/product.

Your delivery team rapidly assembles the services; your business experts can even verify the business processes in the same modeling tool that was used to compose the solution; and, finally, you present the new competitive product to the public.

The key in this MD-SOA process is to have the right infrastructure to support your business model, which should not be based on “dark code” that is supported and understood only by the “geek guys” (that is, the traditional development team). The software that supports your business model should be based on models that are aligned with business capabilities that bring value to the organization. These models are based also on standards—based on reusable Web services that are really your software composite components. These services should be granular enough for you to be able to reuse them in different business applications/services.

Also, your organization needs tools (such as a hypothetical SOA flavor of “Oslo”) to automate business-service models, even workflows—aligning those with new process models and composing existing or new ones to support the business process quickly. As I said, the key point will be how well-integrated “Oslo” will be with all of the plumbing runtimes (ASP.NET, WCF, .NET, and so on).

Finally, by using those model-driven tools, we could even deploy the whole solution to a future scalable infrastructure that is based on lower-level technologies such as “Dublin” (the next version of Microsoft application-server capabilities).

What Will “Oslo” Reach in the Arena of Model-Driven SOA?

The vision for model-driven SOA is that the software that supports your business model must be based on models and those models are aligned with business capabilities that bring value to the organization. Underneath, the models also must be aligned with SOA standards and interoperable, reusable Web services. Overall, however, business users must be able to play directly with those SOA models.

The question is not, “Will we be using ‘Oslo’?” I am sure that we architects and IT people will use it in many flavors: modeling data and metadata; perhaps embedding “Oslo” in other developer applications, such as modeling UML and layer diagrams in a later version of Microsoft Visual Studio Team System (although not the 2010 version, which is still based on the DSL toolkit); modeling workflows; and modeling any custom DSL, all based on this mainstream modeling technology. However, when we talk about model-driven SOA, we are not talking about that. The key question is, “Will business-expert users be using ‘Oslo’ to model SOA or any application?”

If Microsoft is able to achieve this vision and its goals—taking into account the required huge support and integration among all of the technical runtimes and “Oslo” (the “Oslo” product team really has to get support from many different Microsoft product teams)—it could really be the start of a revolution in the applications-development field. It will be like changing from assembler and native code bits to high-level programming languages—even better, because, as Doug says, every person (business user) will be, in a certain way, a programmer, because business-expert users will be creating applications. Of course, this has to be a slow evolution toward the business, but “Oslo” could be the start.

Conclusion

SOA has to evolve toward a more agile design, development, and deployment process. Most of all, however, SOA must close the gap between IT and business.

Model-driven SOA can be the solution to these problems. So, with regard to MDD implementations, “Oslo” is Microsoft’s best bet to reach future paradigms for both MDD and model-driven SOA (a subset of MDD).


Source: The Architecture Journal